Have you ever wondered how machines learn from data and make decisions? Neural networks are the backbone of modern artificial intelligence and have revolutionized how we approach complex problems. In this discussion, let’s unravel the basics of neural networks, focusing mainly on the perceptron and the multilayer perceptron (MLP).

What Are Neural Networks?

Neural networks are a family of algorithms designed to recognize patterns in data. They interpret sensory data such as images, audio, or text through a kind of machine perception, labeling or clustering raw input. Loosely inspired by the human brain, these networks consist of interconnected artificial neurons (nodes) that pass numerical signals to one another in order to process information. If you think of a neural network as a web of interconnected nodes, you are on the right track.

Basic Components of Neural Networks

Before diving deeper into the perceptron and multilayer perceptron, it’s essential to understand the main components that make up a neural network.

- Neurons: The basic units of a neural network. Each neuron receives inputs, processes them, and produces an output.

- Weights: Each connection between neurons has an associated weight, which determines the importance of that input.

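- Activation Function: After a neuron computes the weighted sum of its inputs, it passes that sum through an activation function, which determines the neuron’s output (and, for a simple threshold function, whether the neuron “fires” at all). That output then becomes an input to the next layer.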

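Together, these components determine how the network behaves. The design is loosely inspired by the way biological neurons pass signals, although artificial neural networks are mathematical models rather than faithful simulations of the brain.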

The Perceptron: A Building Block of Neural Networks

The perceptron is the simplest form of a neural network model. Introduced in 1958 by Frank Rosenblatt, it was designed for binary classification tasks. Understanding the perceptron lays the foundation for more complex neural networks.

Structure of a Perceptron

A perceptron consists of:

- Inputs: The data fed into the network.

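- Weights: One weight per input, adjusted during training to improve predictions.

- Bias: An additional learned parameter added to the weighted sum of inputs. It shifts the point at which the neuron activates, so the decision boundary does not have to pass through the origin.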

Mathematical Representation

The overall output of a perceptron can be represented by the function:

$$
y = f\left( \sum_{i} w_i x_i + b \right)
$$

Where:

- $y$ is the output,

- $f$ is the activation function,

- $w_i$ is the weight of the $i$-th input,

- $x_i$ is the $i$-th input,

- $b$ is the bias.

This simple equation highlights how inputs are combined and transformed to produce an output.
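To make the formula concrete, here is a minimal Python sketch of a single perceptron’s forward pass. It assumes $f$ is a step function that outputs 1 when its input is positive, and the weights and bias are hypothetical, hand-picked values (not learned) chosen so that the perceptron computes logical AND.

```python
def step(z):
    """Step activation: returns 1 if z is positive, otherwise 0."""
    return 1 if z > 0 else 0


def perceptron_output(weights, inputs, bias):
    """Compute y = f(sum_i w_i * x_i + b), with f the step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Hypothetical hand-picked parameters: the weighted sum is positive
# only when both inputs are 1, so the perceptron computes logical AND.
weights = [1.0, 1.0]
bias = -1.5

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, perceptron_output(weights, x, bias))
```

Only the input (1, 1) pushes the weighted sum above zero (1 + 1 - 1.5 = 0.5), so it is the only input that produces 1.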

Activation Function

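The activation function transforms a neuron’s weighted sum into its output. In the original perceptron it is a hard threshold that decides whether the neuron activates at all; other functions give a graded response. The most commonly used activation functions include:

- Step Function: Outputs 1 if the input exceeds a certain threshold (often 0) and 0 otherwise. This is the function used in the classic perceptron.

- Sigmoid Function: Squashes any input into the range between 0 and 1 using $\sigma(z) = \frac{1}{1 + e^{-z}}$. It is smooth and non-linear, which makes it suitable for modeling probabilities.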

- ReLU (Rectified Linear Unit): Outputs the input directly if it is positive; otherwise, it outputs zero.
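The three activation functions above can be sketched in a few lines of Python. This is an illustrative implementation, not any particular library’s; the default threshold of 0 for the step function is an assumption.

```python
import math


def step(z, threshold=0.0):
    """Step: 1.0 if z exceeds the threshold, otherwise 0.0."""
    return 1.0 if z > threshold else 0.0


def sigmoid(z):
    """Sigmoid: mathematically maps any real z into (0, 1).

    Both branches compute the same value; splitting on the sign of z
    keeps math.exp from overflowing for large negative inputs.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    """ReLU: returns z for positive inputs and 0.0 otherwise."""
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  step={step(z):.0f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}")
```

The step function jumps abruptly from 0 to 1, while the sigmoid changes smoothly; that smoothness is what makes gradient-based training possible in multilayer networks.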

Limitations of the Perceptron

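While the perceptron is foundational, it has a key limitation: it can only classify linearly separable data, that is, data whose two classes can be divided by a single straight line (or, with more inputs, a flat hyperplane). For example, it cannot learn XOR (exclusive OR), which outputs 1 when exactly one of its two inputs is 1: no single line separates the points (0, 1) and (1, 0) from (0, 0) and (1, 1). This limitation led to the evolution of more advanced architectures, such as the multilayer perceptron.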
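The limitation is easy to observe in practice. The sketch below trains a single perceptron with the classic perceptron learning rule (whenever a prediction is wrong, add learning_rate × error × input to each weight) on AND, which is linearly separable, and on XOR (output 1 when exactly one input is 1), which is not. The zero initialization, learning rate of 1.0, and 20-epoch budget are illustrative assumptions.

```python
def predict(weights, bias, x):
    """Step-activated perceptron output for input vector x."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0


def train_perceptron(data, epochs=20, lr=1.0):
    """Perceptron learning rule: on each mistake, w += lr*error*x and b += lr*error."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every example classified correctly: stop early
            break
    return weights, bias


def accuracy(weights, bias, data):
    """Fraction of examples the perceptron classifies correctly."""
    return sum(predict(weights, bias, x) == t for x, t in data) / len(data)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [(x, x[0] & x[1]) for x in inputs]
XOR = [(x, x[0] ^ x[1]) for x in inputs]

for name, data in (("AND", AND), ("XOR", XOR)):
    w, b = train_perceptron(data)
    print(name, "accuracy:", accuracy(w, b, data))
```

AND reaches an accuracy of 1.0 within a few epochs. XOR never does, however many epochs you allow, because no choice of weights and bias lets a single perceptron classify all four XOR points correctly.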

The Multilayer Perceptron (MLP)

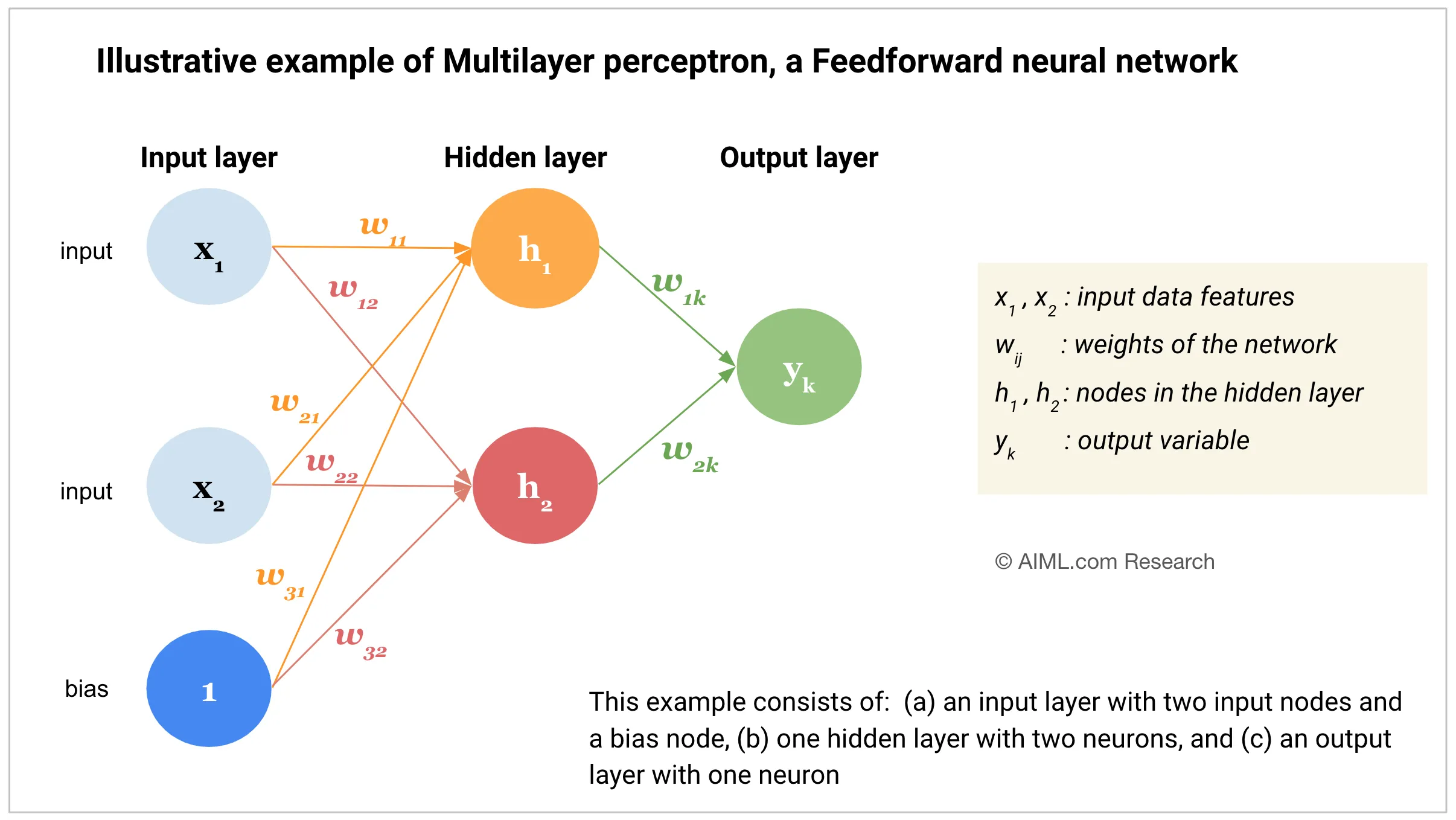

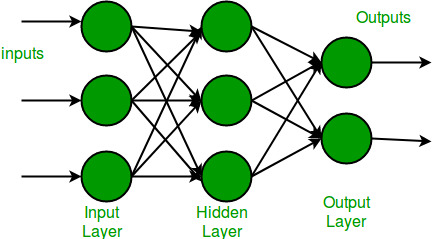

As the name suggests, a multilayer perceptron consists of multiple layers of neurons. This enhancement allows the network to learn complex patterns in data.

Structure of an MLP

An MLP comprises:

- Input Layer: The first layer, where data is fed into the network.

- Hidden Layers: One or more layers where computations occur. More hidden layers enable the network to learn more complex patterns.

- Output Layer: The final layer that produces the predictions.

Feedforward Mechanism

In an MLP, data flows in one direction, from the input layer through the hidden layers to the output layer, with no loops. This is why MLPs are called feedforward networks; the same forward pass is used both while training the model and when making predictions. Each neuron computes a weighted sum of its inputs plus a bias, applies its activation function, and passes the result on as an input to the next layer.
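A minimal sketch of the feedforward computation in plain Python, assuming each layer is stored as a (weights, biases, activation) triple. The weights are hypothetical and hand-picked rather than trained: they follow a classic construction (the one used in the XOR example of Goodfellow et al.’s “Deep Learning”) in which a two-neuron ReLU hidden layer lets an MLP compute XOR (output 1 when exactly one input is 1), a function that is not linearly separable and therefore beyond a single perceptron.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feedforward(layers, inputs):
    """Pass the inputs through each layer in turn; outputs feed the next layer."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations


# Hypothetical hand-picked parameters for a 2-2-1 network that computes XOR.
xor_net = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu),  # hidden layer: 2 ReLU neurons
    ([[1.0, -2.0]], [0.0], identity),                # output layer: 1 linear neuron
]

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, feedforward(xor_net, x)[0])
```

The hidden layer remaps the inputs so that (0, 1) and (1, 0) land on the same point, after which a single linear output neuron can separate the two classes.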

Backpropagation: Learning from Mistakes

Backpropagation is the standard algorithm for training MLPs. It works out how much each weight contributed to the prediction error: starting from the loss at the output, it applies the chain rule layer by layer, moving backward through the network, to compute the gradient of the loss with respect to every weight. An optimizer such as gradient descent then uses these gradients to adjust the weights in the direction that reduces the error. A training step consists of three parts:

- Forward Pass: Calculate the output by passing the inputs through the network.

- Calculate Loss: Evaluate how far the prediction is from the actual result.

- Backward Pass: Compute the gradients and update the weights.
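The three steps above can be made concrete with a tiny 2-2-1 network (two inputs, two sigmoid hidden neurons, one sigmoid output). This is a minimal sketch under stated assumptions: the loss is binary cross-entropy, for which the gradient with respect to the output neuron’s weighted sum simplifies to prediction minus target; the update rule is plain gradient descent with a learning rate of 0.1; and the parameter values and the single training example are arbitrary. It also compares one backpropagated gradient with a finite-difference estimate, a common sanity check for a hand-written backward pass.

```python
import copy
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: returns hidden activations and the output probability."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y_hat


def loss(params, x, y):
    """Binary cross-entropy between the prediction and the target y (0 or 1)."""
    _, y_hat = forward(params, x)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))


def backward(params, x, y):
    """Backward pass: apply the chain rule from the loss back to every parameter."""
    W1, b1, W2, b2 = params
    h, y_hat = forward(params, x)
    dz2 = y_hat - y  # dL/dz at the output neuron (sigmoid + cross-entropy)
    dW2 = [dz2 * hj for hj in h]
    db2 = dz2
    # Propagate through W2, then through each hidden sigmoid (derivative h * (1 - h)).
    dz1 = [dz2 * W2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    dW1 = [[dz1[j] * xi for xi in x] for j in range(len(h))]
    db1 = dz1[:]
    return dW1, db1, dW2, db2


def gradient_step(params, grads, lr):
    """Gradient descent update: move every parameter against its gradient."""
    (W1, b1, W2, b2), (dW1, db1, dW2, db2) = params, grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, dW1)]
    b1 = [b - lr * g for b, g in zip(b1, db1)]
    W2 = [w - lr * g for w, g in zip(W2, dW2)]
    return W1, b1, W2, b2 - lr * db2


# Arbitrary illustrative parameters (W1, b1, W2, b2) and one training example.
params = ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2], [0.7, -0.6], 0.05)
x, y = [1.0, 0.5], 1

grads = backward(params, x, y)

# Finite-difference check for one weight, W1[0][0].
eps = 1e-5
plus, minus = copy.deepcopy(params), copy.deepcopy(params)
plus[0][0][0] += eps
minus[0][0][0] -= eps
numeric = (loss(plus, x, y) - loss(minus, x, y)) / (2 * eps)
print("analytic:", grads[0][0][0], "numeric:", numeric)

new_params = gradient_step(params, grads, lr=0.1)
print("loss before:", loss(params, x, y), "after:", loss(new_params, x, y))
```

The analytic and numeric gradients should agree to several decimal places, and the single gradient step lowers the loss on this example. A full training loop repeats the forward pass, loss calculation, and backward pass over many examples and epochs.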

The Importance of Activation Functions in MLPs

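Like perceptrons, MLPs rely heavily on activation functions. Non-linear activations are what give hidden layers their power: without them, a stack of layers would collapse into a single linear transformation, no more expressive than one layer. Different layers can also use different activation functions. Here are common choices for hidden and output layers: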

| Layer Type | Activation Function |
|---|---|
| Hidden Layers | ReLU, Tanh, Sigmoid |
| Output Layer (Binary) | Sigmoid |
| Output Layer (Multiclass) | Softmax |
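For the multiclass output layer, softmax turns a vector of raw scores (logits) into probabilities that are positive and sum to 1. A minimal sketch follows; subtracting the largest score before exponentiating is a common trick to avoid overflow and does not change the result. The example scores are made up for illustration.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # shift for numerical stability; cancels out in the ratio
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three classes
probs = softmax(logits)
print([round(p, 3) for p in probs])
```

The class with the highest score always receives the highest probability, and the prediction is usually taken to be that class.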

Limitations of MLPs

While MLPs are powerful, they come with challenges, such as:

- Overfitting: The model may learn the noise in the training data, leading to poor generalization.

- Large Data Requirements: MLPs need significant data for training to achieve good generalization.

- Training Time: MLPs can take a long time to train, especially with many layers and complex data.

Comparison: Perceptron vs. Multilayer Perceptron

Understanding the differences between a perceptron and an MLP can help clarify when to use each type of model.

| Feature | Perceptron | Multilayer Perceptron |
|---|---|---|
| Layers | Single Layer | Multiple Layers (input, one or more hidden, output) |
| Capability | Linearly separable data | Complex, non-linear patterns |
| Number of Neurons | A single neuron, with one weight per input | Multiple per layer |
| Training Algorithm | Perceptron learning rule | Backpropagation with gradient descent |
| Use Cases | Basic binary classification | Advanced tasks like image recognition, language processing |

Applications of Neural Networks

Neural networks, particularly MLPs, have transformed several fields. Here are some prominent applications:

Image Recognition

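In this domain, neural networks can identify objects, faces, and other features within images. By stacking multiple layers, they can discern intricate patterns and details, which makes them valuable for tasks like facial recognition. In practice, modern image systems mostly rely on convolutional neural networks (CNNs), a specialized architecture built on the same layered principles as the MLP.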

Natural Language Processing (NLP)

Neural networks help machines process human language. Whether translating text or responding to queries, they are central to applications like chatbots and voice assistants.

Medical Diagnosis

By analyzing patient data, neural networks can assist healthcare professionals in diagnosing conditions. The ability to uncover patterns that may not be immediately obvious aids clinicians in making informed decisions.

The Future of Neural Networks

As AI continues to advance, neural networks remain an active area of research. Researchers are constantly developing new architectures and methods to improve learning and processing capabilities. Innovations such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are just two examples of how far the field has evolved.

Evolving Techniques and Trends

- Transfer Learning: Utilizes pre-trained models to adapt to new tasks quickly, reducing training time.

- Neural Architecture Search (NAS): Automates the design of neural networks to optimize performance efficiently.

Getting Started with Neural Networks

If you’re interested in building your own neural networks, here’s a simplified guide to help you begin:

Choosing a Programming Language

Python is the most popular choice for developing neural networks, thanks to its simplicity and the extensive libraries available.

Libraries to Consider

- TensorFlow: A powerful library developed by Google that enables building and training neural networks with ease.

- Keras: A user-friendly high-level API that ships with TensorFlow (as tf.keras), making it accessible for beginners. Since Keras 3, it can also run on JAX and PyTorch backends.

- PyTorch: A library originally developed at Facebook (now Meta) that is popular for its flexibility and dynamic computation graphs.

Learning Resources

- Books: Reading books such as “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville is an excellent way to deepen your understanding.

- Online Courses: Platforms like Coursera and edX offer courses covering neural network fundamentals and applications.

Building Your First Neural Network

- Prepare Your Data: Clean and preprocess your dataset.

- Define Your Model: Decide on the architecture—single-layer or multilayer.

- Compile the Model: Choose a loss function and optimizer.

- Train the Model: Fit your model to the training data.

- Evaluate the Results: Test accuracy and tune parameters as needed.
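The five steps above map directly onto the Keras API. The sketch below is illustrative only: it assumes TensorFlow (which bundles Keras) and NumPy are installed, and it uses a synthetic, made-up dataset in which a point belongs to class 1 when its two coordinates have the same sign, a non-linear pattern that calls for a hidden layer. The layer width, optimizer, and epoch count are arbitrary choices, not tuned values.

```python
import numpy as np
from tensorflow import keras

# 1. Prepare the data: synthetic points labeled 1 when x1 and x2 share a sign.
rng = np.random.default_rng(seed=0)
X = rng.uniform(-1.0, 1.0, size=(400, 2)).astype("float32")
y = (X[:, 0] * X[:, 1] > 0).astype("float32").reshape(-1, 1)
X_train, X_test = X[:300], X[300:]
y_train, y_test = y[:300], y[300:]
# Standardize using statistics from the training split only.
mean, std = X_train.mean(axis=0), X_train.std(axis=0)
X_train, X_test = (X_train - mean) / std, (X_test - mean) / std

# 2. Define the model: an MLP with one hidden ReLU layer and a sigmoid output.
model = keras.Sequential([
    keras.Input(shape=(2,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

# 3. Compile: choose a loss function and an optimizer (plus a metric to report).
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# 4. Train: fit the model to the training data.
model.fit(X_train, y_train, epochs=50, batch_size=32, verbose=0)

# 5. Evaluate on held-out data that the model never saw during training.
test_loss, test_acc = model.evaluate(X_test, y_test, verbose=0)
print(f"test loss={test_loss:.3f}  test accuracy={test_acc:.3f}")
```

Exact numbers depend on the library version and random initialization. If the results are disappointing, tuning might mean widening the hidden layer, adding a layer, or training for more epochs, then re-evaluating on the held-out data.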

Conclusion

Neural networks, particularly the perceptron and multilayer perceptron, have laid the groundwork for advancements in artificial intelligence. While the perceptron provides essential insights into binary classification, the multilayer perceptron opens the gateway to solving more complex problems.

As you continue your journey in the world of neural networks, remember the value of practice, persistence, and a quest for understanding. Whether applying them in image processing, natural language tasks, or future innovations, the impact of neural networks on technology remains profound. Embrace the learning process, and you might just find yourself contributing to this fascinating field!