Have you ever wondered how companies turn raw data into valuable insights? Understanding this process can open up a whole new world of possibilities for you, especially if you’re interested in data science. One crucial part of that journey is building data cleaning and transformation pipelines.

What Are Data Pipelines?

Before we get into the nitty-gritty, let’s define what a data pipeline is. At its core, a data pipeline is a series of data processing steps that involve collecting, cleaning, transforming, and moving data from one system to another. These pipelines are essential for businesses that rely on data for decision-making.

Why Are Data Pipelines Important?

Data pipelines play a significant role in ensuring that the data you work with is high quality and usable. Clean and well-structured data allows for better analysis and more accurate insights. Without a proper pipeline, you may find yourself struggling with messy data that can lead to poor decision-making.

The Importance of Data Cleaning

Now, let’s focus on one of the critical components of these pipelines: data cleaning.

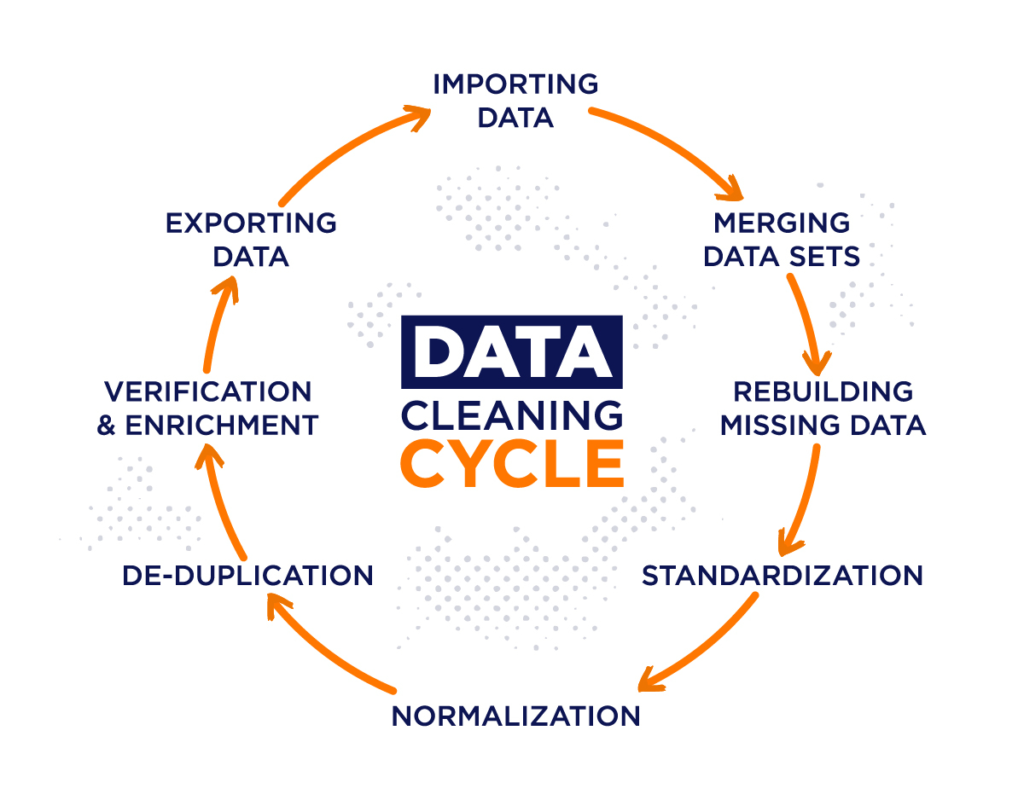

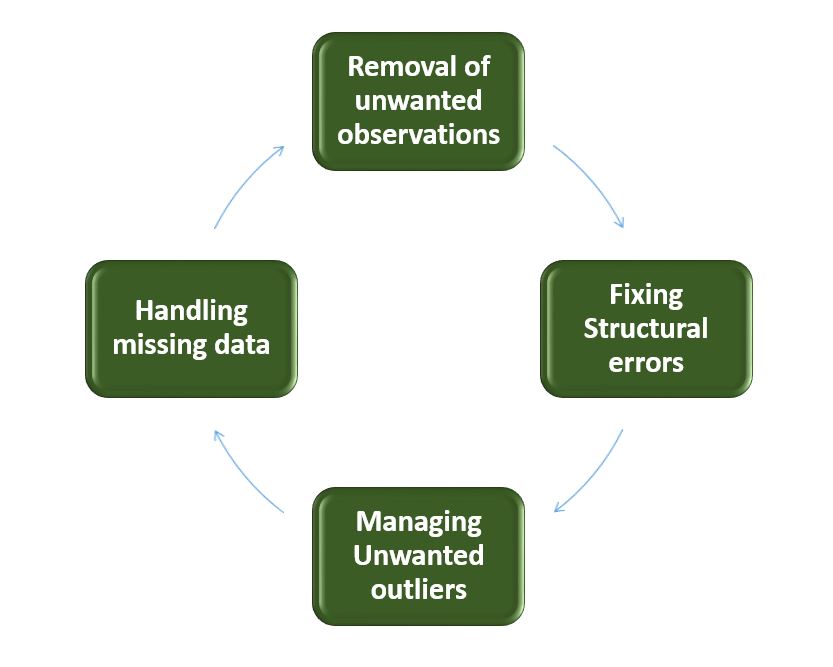

What Is Data Cleaning?

Data cleaning refers to the process of identifying and correcting or removing errors, inconsistencies, and inaccuracies in your data sets. This step is vital because “dirty” data—data that contains errors—can significantly skew your results.

Common Issues in Data Cleaning

There are several common issues that you might encounter during the data cleaning process:

- Missing Values: Often, data sets have missing values that can arise for various reasons, including human error or system failures.

- Duplicate Records: Duplicates can inflate your data size and lead to misleading results.

- Outliers: These are unexpected values that deviate significantly from other observations, which can heavily influence your analysis.

- Inconsistent Formats: Data collected from multiple sources may have different formats, making it difficult to analyze consistently.
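To make these four issues concrete, here is a minimal Python sketch that uses only the standard library. The records, field names, and the two accepted date formats are hypothetical, and it assumes dates like `05/01/2024` are day-first. It removes exact duplicates, normalizes dates to ISO 8601, drops rows that are still missing a required value, and flags outliers using the common rule of 1.5 times the interquartile range (IQR).

```python
import statistics
from datetime import datetime

# Hypothetical raw records that exhibit all four issues.
RAW = [
    {"id": 1, "signup": "2024-01-05", "spend": 120.0},
    {"id": 2, "signup": "05/01/2024", "spend": 95.5},    # inconsistent format (assumed day-first)
    {"id": 2, "signup": "05/01/2024", "spend": 95.5},    # duplicate record
    {"id": 3, "signup": None, "spend": 101.0},           # missing value
    {"id": 4, "signup": "2024-01-07", "spend": 110.0},
    {"id": 5, "signup": "2024-01-08", "spend": 99.0},
    {"id": 6, "signup": "2024-01-09", "spend": 105.0},
    {"id": 7, "signup": "2024-01-10", "spend": 9999.0},  # suspicious outlier
]

# Assumed set of date formats seen across the sources.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(value):
    """Return an ISO 8601 date string, or None if the value is missing or unparseable."""
    if value is None:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean(records):
    # Duplicates: keep (a copy of) the first occurrence of each identical record.
    seen, unique = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))

    # Inconsistent formats: normalize every date to ISO 8601.
    for rec in unique:
        rec["signup"] = parse_date(rec["signup"])

    # Missing values: drop rows that still lack a required field.
    complete = [r for r in unique if r["signup"] is not None and r["spend"] is not None]

    # Outliers: flag, rather than delete, values outside the 1.5 * IQR fences.
    q1, _, q3 = statistics.quantiles([r["spend"] for r in complete], n=4, method="inclusive")
    iqr = q3 - q1
    for rec in complete:
        rec["is_outlier"] = not (q1 - 1.5 * iqr <= rec["spend"] <= q3 + 1.5 * iqr)
    return complete


for row in clean(RAW):
    print(row)
```

Flagging outliers instead of dropping them is a deliberate choice in this sketch: an extreme value may be an error or a genuinely large purchase, and that decision usually needs a human or a business rule.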

Strategies for Effective Data Cleaning

In your cleaning journey, you may find a variety of strategies helpful:

- Automated Scripts: Using scripts in programming languages like Python or R to identify and correct issues can save you a lot of time.

- Data Profiling: This involves examining your data, for example how often each field is missing and how many distinct values it holds, to find quality issues before you start cleaning.

- Validation Rules: Setting up rules that define acceptable data can help you catch errors early on.

- Peer Review: Having others review your cleaned data can help identify any mistakes you might have overlooked.
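Data profiling and validation rules lend themselves well to automated scripts. The sketch below is one possible approach rather than a standard API: `profile` counts missing and distinct values per field, and `validate` checks each record against a dictionary of named rules. The rules and sample records are hypothetical.

```python
# Hypothetical validation rules: each name maps to a predicate that a valid record satisfies.
RULES = {
    "email contains @": lambda r: isinstance(r.get("email"), str) and "@" in r["email"],
    "age between 0 and 120": lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
    "country is a two-letter uppercase code": lambda r: (
        isinstance(r.get("country"), str) and len(r["country"]) == 2 and r["country"].isupper()
    ),
}


def profile(records):
    """Minimal data profile: count missing and distinct values for every field seen."""
    fields = sorted({field for r in records for field in r})
    return {
        field: {
            "missing": sum(1 for r in records if r.get(field) is None),
            "distinct": len({r[field] for r in records if r.get(field) is not None}),
        }
        for field in fields
    }


def validate(records, rules=RULES):
    """Return (record index, rule name) for every rule a record violates."""
    return [
        (i, name)
        for i, record in enumerate(records)
        for name, check in rules.items()
        if not check(record)
    ]


people = [
    {"email": "ana@example.com", "age": 34, "country": "PT"},
    {"email": "bob.example.com", "age": 29, "country": "us"},
    {"email": None, "age": 250, "country": "DE"},
]

print(profile(people))
for index, rule in validate(people):
    print(f"record {index} failed: {rule}")
```

Keeping the rules in a named dictionary means the violation report doubles as documentation of what "acceptable data" means, which also makes peer review easier.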

Data Transformation: Converting Data into Insight

Once your data is clean, the next step is transformation.

What Is Data Transformation?

Data transformation refers to the process of converting data from its original format into a format that’s more appropriate for analysis. This can involve several methods and techniques.

Common Data Transformation Techniques

- Normalization: Adjusting the values in your data set to a common scale without distorting differences in the ranges of values.

- Aggregation: Summarizing your data to provide useful insights, such as calculating averages or totals.

- Encoding: Converting categorical data into a numeric form that machine learning algorithms can consume, often through techniques like one-hot encoding.

- Feature Engineering: Creating new features from the existing data to improve the performance of machine learning models.
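Each of the techniques above fits in a few lines of plain Python. This sketch uses hypothetical order data; for normalization it uses min-max scaling, (x - min) / (max - min), which is one common choice among several (z-score standardization is another). In practice, libraries such as pandas and scikit-learn provide tested versions of these operations.

```python
from collections import defaultdict

# Hypothetical, already-cleaned order records.
orders = [
    {"region": "north", "channel": "web", "amount": 20.0, "items": 2},
    {"region": "south", "channel": "store", "amount": 50.0, "items": 5},
    {"region": "north", "channel": "store", "amount": 80.0, "items": 4},
    {"region": "south", "channel": "web", "amount": 35.0, "items": 7},
]


def min_max(values):
    """Normalization (min-max): rescale values to [0, 1] via (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


def total_by(rows, key, field):
    """Aggregation: sum `field` within each group defined by `key`."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row[field]
    return dict(totals)


def one_hot(rows, field):
    """Encoding: one 0/1 column per distinct category, in sorted order."""
    categories = sorted({row[field] for row in rows})
    return [{f"{field}={c}": int(row[field] == c) for c in categories} for row in rows]


def add_avg_item_price(rows):
    """Feature engineering: derive a new column from two existing ones."""
    return [{**row, "avg_item_price": row["amount"] / row["items"]} for row in rows]


print(min_max([o["amount"] for o in orders]))                    # [0.0, 0.5, 1.0, 0.25]
print(total_by(orders, "region", "amount"))                      # {'north': 100.0, 'south': 85.0}
print(one_hot(orders, "channel")[0])                             # {'channel=store': 0, 'channel=web': 1}
print([o["avg_item_price"] for o in add_avg_item_price(orders)])  # [10.0, 10.0, 20.0, 5.0]
```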

Why Transformation Matters

Transformed data can provide clarity and insight that raw data often does not. It enables more effective analysis and allows you to leverage advanced algorithms to gain insights that drive decision-making.

Building a Data Cleaning & Transformation Pipeline

Now that you have an idea of what data cleaning and transformation are, let’s put it all together and look at how you can build your own pipeline.

Steps to Create Your Pipeline

Building a data pipeline requires some planning and consideration. Here’s a step-by-step guide:

- Identify Your Data Sources: Determine where your data is coming from. This could be databases, APIs, or flat files.

- Collect Data: Use tools and scripts to gather data from the identified sources.

- Pre-Process Data: Begin the data cleaning process by identifying and resolving inconsistent formats, missing values, and duplicates.

- Transform Data: Apply necessary transformations, such as normalizing, aggregating, or encoding, to prepare your data for analysis.

- Load Data into Destination System: After cleaning and transforming, load your data into a data warehouse or another analysis-ready destination.

- Monitoring and Maintenance: Once your pipeline is operational, ongoing monitoring is necessary to ensure data quality and to address any issues that arise over time.
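The steps above can be strung together in a short end-to-end sketch. It is illustrative only: the CSV text stands in for a real source, the cleaning rules (deduplicating on `order_id`, dropping rows without an amount) are assumptions, an in-memory SQLite database stands in for a data warehouse, and the monitoring step is just a row-count check.

```python
import csv
import io
import sqlite3

# Hypothetical flat-file source; in practice this could be a database query or an API response.
SOURCE_CSV = """order_id,region,amount
1,north,20.00
2,South ,50.00
2,South ,50.00
3,north,
4,south,35.00
"""


def extract(text):
    """Collect: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def preprocess(rows):
    """Clean: drop duplicate order IDs and rows missing an amount; normalize region text."""
    seen, cleaned = set(), []
    for row in rows:
        order_id = row["order_id"].strip()
        amount = (row["amount"] or "").strip()
        if order_id in seen or not amount:
            continue
        seen.add(order_id)
        cleaned.append({
            "order_id": int(order_id),
            "region": row["region"].strip().lower(),
            "amount": float(amount),
        })
    return cleaned


def transform(rows):
    """Transform: aggregate order amounts per region."""
    totals = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return sorted(totals.items())


def load(conn, region_totals):
    """Load: write results into the destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS region_sales (region TEXT PRIMARY KEY, total REAL)")
    conn.executemany("INSERT OR REPLACE INTO region_sales VALUES (?, ?)", region_totals)
    conn.commit()


def check(conn, expected_rows):
    """Monitor: a minimal post-load check that the row count matches what was produced."""
    (count,) = conn.execute("SELECT COUNT(*) FROM region_sales").fetchone()
    if count != expected_rows:
        raise RuntimeError(f"expected {expected_rows} rows, found {count}")
    return count


conn = sqlite3.connect(":memory:")
totals = transform(preprocess(extract(SOURCE_CSV)))
load(conn, totals)
check(conn, len(totals))
print(conn.execute("SELECT region, total FROM region_sales ORDER BY region").fetchall())
```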

Tools for Building Your Pipeline

You might find various tools helpful in this process. Here are a few popular options:

| Tool Type | Example Tools | Description |
|---|---|---|
| Data Integration | Apache NiFi, Talend | Tools designed to automate data flows between systems. |
| Data Cleaning | OpenRefine, Trifacta | Specialized tools to clean and prepare data sets. |
| ETL (Extract, Transform, Load) | Apache Spark, AWS Glue | Tools that handle large-scale data processing and orchestration. |

Best Practices for Pipeline Creation

- Keep It Modular: Break your pipeline into separate, manageable modules for cleaning and transformation. This approach allows for easier debugging and modifications.

- Documentation: Document each step of your pipeline. Good documentation helps maintain the pipeline over time, especially as team members change or as the codebase grows.

- Automate When Possible: Whenever you can, automate tasks within the pipeline to save time and reduce the chance of human error.
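One way to apply these practices is to write each stage as a small, documented function with the same input and output shape, then let a runner apply the stages in order. The `run_pipeline` helper and the stage names below are hypothetical; logging the row count before and after each stage makes it easy to see which module changed the data unexpectedly.

```python
import logging
from typing import Callable, List

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("pipeline")

# Every stage takes a list of records and returns a new list of records.
Stage = Callable[[List[dict]], List[dict]]


def dedupe(records: List[dict]) -> List[dict]:
    """Remove exact duplicate records, keeping the first occurrence."""
    seen, out = set(), []
    for record in records:
        key = tuple(sorted(record.items()))
        if key not in seen:
            seen.add(key)
            out.append(record)
    return out


def drop_missing(records: List[dict]) -> List[dict]:
    """Remove records in which any field is None."""
    return [r for r in records if all(v is not None for v in r.values())]


def run_pipeline(records: List[dict], stages: List[Stage]) -> List[dict]:
    """Apply each stage in order, logging row counts so issues can be traced to one stage."""
    for stage in stages:
        before = len(records)
        records = stage(records)
        log.info("%s: %d -> %d rows", stage.__name__, before, len(records))
    return records


data = [{"id": 1, "v": 3}, {"id": 1, "v": 3}, {"id": 2, "v": None}, {"id": 3, "v": 7}]
result = run_pipeline(data, [dedupe, drop_missing])
```

Adding, removing, or reordering a step means editing the list of stages, and because every stage is a plain function, each one can be tested on its own and the whole run can be scheduled without manual intervention.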

Real-World Applications of Data Cleaning & Transformation Pipelines

Understanding how data cleaning and transformation pipelines work is one thing, but seeing real-world applications can really emphasize their importance. Here are some scenarios where these processes stand out:

Marketing Analytics

In marketing analytics, companies collect large volumes of customer data from various channels. A robust data pipeline allows for the cleaning of customer data, enabling marketers to segment their audience accurately and personalize campaigns based on clear insights.

Financial Services

For financial institutions, accurate and timely data is essential. A well-structured pipeline cleans transaction data so that fraud detection algorithms work from accurate inputs.

Healthcare

In the healthcare sector, clean and well-transformed data can help improve patient outcomes. Data pipelines can ensure that patient records are consistent and accurate, which is critical for any analytical models that predict patient needs or outcomes.

Challenges in Data Cleaning and Transformation

While the benefits are clear, data cleaning and transformation come with their own set of challenges.

Dealing with Big Data

With the rise of big data, managing cleaning and transformation at scale can become complex. You may need distributed computing frameworks to handle large data sets efficiently.
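Before reaching for a distributed framework, it can help to see the pattern such frameworks build on. The sketch below is a single-machine stand-in, not a distributed implementation: it streams rows in fixed-size chunks, computes a partial result per chunk, and combines the partials. Because each chunk is processed independently, the per-chunk work could in principle run on separate workers. The file name, data, and chunk size are hypothetical.

```python
import csv
import io
from itertools import islice


def read_in_chunks(rows, chunk_size):
    """Yield lists of at most `chunk_size` rows, so only one chunk is held in memory at a time."""
    iterator = iter(rows)
    while True:
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        yield chunk


def partial_sum(chunk):
    """Per-chunk work: skip rows without an amount, then return (sum, count) for the rest."""
    amounts = [float(r["amount"]) for r in chunk if (r["amount"] or "").strip()]
    return sum(amounts), len(amounts)


def chunked_mean(rows, chunk_size=10_000):
    """Combine per-chunk (sum, count) partials into an overall mean."""
    total, count = 0.0, 0
    for chunk in read_in_chunks(rows, chunk_size):
        chunk_total, chunk_count = partial_sum(chunk)  # independent of every other chunk
        total += chunk_total
        count += chunk_count
    return total / count if count else None


# In practice this might be open("large_file.csv", newline="") (hypothetical file);
# a small in-memory string stands in here.
source = io.StringIO("id,amount\n1,10\n2,\n3,30\n4,20\n5,40\n")
print(chunked_mean(csv.DictReader(source), chunk_size=2))  # 25.0
```

The partials are sums and counts rather than per-chunk means, because averaging chunk means would give the wrong answer whenever chunks contain different numbers of valid rows.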

Data Privacy Regulations

When working with personal data, it’s critical to be aware of data privacy regulations such as the GDPR. Make sure your data practices are compliant, especially when transformations such as joining or enriching data sets could inadvertently make individuals identifiable.
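A common safeguard is to pseudonymize direct identifiers before data moves further down the pipeline. This sketch replaces an email address with a keyed hash (HMAC-SHA256 from Python's standard library) and drops other identifying fields; the field names, the `protect` policy, and the hard-coded key are assumptions for illustration. Under the GDPR, pseudonymized data is still personal data, so this reduces risk but does not by itself make a data set compliant.

```python
import hashlib
import hmac

# Assumption: in a real pipeline the key comes from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-store"


def pseudonymize(value, key=SECRET_KEY):
    """Map an identifier to a stable token with HMAC-SHA256.

    The same input always yields the same token, so joins and counts still work,
    but without the key nobody can recompute tokens for guessed values.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def protect(record, hash_fields=("email",), drop_fields=("name", "phone")):
    """Hypothetical policy: drop some identifiers outright and pseudonymize others."""
    out = {k: v for k, v in record.items() if k not in drop_fields}
    for field in hash_fields:
        if out.get(field) is not None:
            out[field] = pseudonymize(out[field])
    return out


customer = {"name": "Ana", "email": " Ana@Example.com", "phone": "555-0100", "spend": 120.0}
# name and phone are removed; email becomes a 64-character hex token.
print(protect(customer))
```

A keyed hash is used instead of a plain SHA-256 digest because common identifiers such as email addresses can be guessed and hashed by anyone, whereas an HMAC token cannot be reproduced without the key.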

Continuous Change

Data is rarely static. It evolves over time, which means your pipelines must be adaptable. When new data sources are integrated, you need to ensure that your cleaning and transformation processes keep up with the changes.
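One lightweight way to keep up with changing sources is to check incoming records against the schema the pipeline expects and report any drift before it reaches the cleaning steps. The expected schema and the sample row below are hypothetical.

```python
# Hypothetical contract describing the fields (and types) the pipeline expects.
EXPECTED_SCHEMA = {"order_id": int, "region": str, "amount": float}


def schema_drift(record, expected=EXPECTED_SCHEMA):
    """Compare one incoming record against the expected schema and describe any differences."""
    missing = sorted(set(expected) - set(record))
    unexpected = sorted(set(record) - set(expected))
    wrong_type = sorted(
        field
        for field, expected_type in expected.items()
        if field in record and record[field] is not None
        and not isinstance(record[field], expected_type)
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}


# A row from a newly integrated source: a renamed column and an ID that arrives as text.
new_source_row = {"order_id": "17", "region": "west", "amount_usd": 12.5}
print(schema_drift(new_source_row))
# {'missing': ['amount'], 'unexpected': ['amount_usd'], 'wrong_type': ['order_id']}
```

Running a check like this on a sample from each new source surfaces renamed columns and type changes before they silently break downstream cleaning and transformation steps.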

Summary

Data cleaning and transformation pipelines are fundamental to effective data science practices. By properly managing these pipelines, you ensure that the data you work with is of high quality, which in turn leads to more reliable insights and better decision-making.

You’ve learned about the significance of data pipelines, the processes of cleaning and transformation, and how to build your own pipeline for successful data management. Utilizing these practices not only enhances the quality of your data but also opens the door for advanced analytics and model-building.

Equipped with this knowledge, you’re now better prepared to take on your own data challenges. Embrace the journey of working with data; there’s a wealth of information waiting to be uncovered!